Docker is the most popular container technology out there.

Why do you need containers?

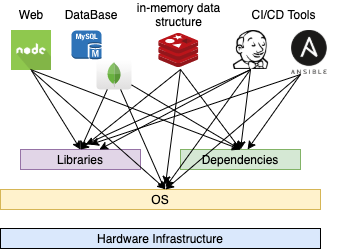

Imagine an application stack with many services: Node.js and Spring Boot as web servers, MySQL and MongoDB as databases, Redis for messaging, and Jenkins and Ansible as CI/CD tools. Running all of these together caused several problems:

- Compatibility of the services with the underlying operating system was a constant concern.

- We had to ensure that all the different services were compatible with the version of the operating system we planned to use.

- At times, a certain version of one of these services was not compatible with that OS version.

- We also had to check compatibility between the services and the libraries and dependencies on the OS.

- We had issues where one service required one version of a dependent library, whereas another service required a different version.

- The architecture of our application changed over time.

- We had to upgrade to newer versions of these components, change the database, and so on.

- Every time something changed, we had to go through the same process of checking compatibility between the various components and the underlying infrastructure.

- This compatibility matrix problem is usually referred to as the "matrix from hell".

- Whenever a new developer came on board, setting up a new environment was really difficult.

- New developers had to follow a long set of instructions and run hundreds of commands to finally set up their environments.

- They had to make sure they were using the right operating system and the right version of each component, and each developer had to set all of that up by themselves every time.

- We also had different development, test, and production environments.

- One developer might be comfortable with one OS while others used another.
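The "matrix from hell" described above can be illustrated with a small Python model. It assumes that on one shared OS each library can be installed in only one version. It then compares that setup with giving every service its own isolated environment, which is what containers provide. The service names, libraries and version numbers are hypothetical and chosen only for illustration.

```python
# Hypothetical requirements: service -> {library: version}. Illustrative only.
REQUIREMENTS = {
    "web-server": {"libssl": "1.1"},
    "database": {"libssl": "3.0"},
    "messaging": {"libssl": "3.0", "libc": "2.31"},
}


def find_conflicts(services):
    """Return libraries that the given services need in different versions.

    Models one shared environment: each library can have only one installed version,
    so the first service to require a library "pins" its version.
    """
    chosen = {}      # library -> (pinned version, service that pinned it)
    conflicts = []   # (library, pinning service, pinned version, other service, wanted version)
    for service in services:
        for lib, version in REQUIREMENTS[service].items():
            if lib in chosen and chosen[lib][0] != version:
                pinned_version, pinned_by = chosen[lib]
                conflicts.append((lib, pinned_by, pinned_version, service, version))
            else:
                chosen.setdefault(lib, (version, service))
    return conflicts


# All services installed side by side on one OS: they share one set of libraries.
shared = find_conflicts(["web-server", "database", "messaging"])

# One isolated environment per service (the container approach): each check sees one service.
isolated = [c for service in REQUIREMENTS for c in find_conflicts([service])]

print(shared)
print(isolated)
```

In the shared case, the web server's pinned `libssl` clashes with both other services. With one environment per service, no conflicts are reported.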

Thus, we needed something that could help us with the compatibility issue: something that would allow us to modify or change these components without affecting the other components, and even modify the underlying operating system as required.

That is why Docker is needed.

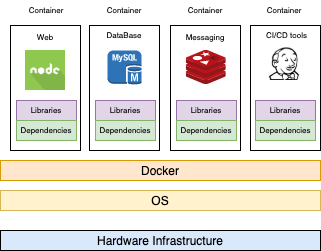

With Docker, we can run each component in a separate container with its own libraries and dependencies. They all run on the same VM and OS, but within separate environments, or containers. We only have to build the Docker configuration once, and all our developers can then get started with a simple `docker run` command. Whatever underlying operating system they use, all they need is Docker installed on their systems.
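The "one `docker run` per component" idea can be sketched in Python that only builds the commands (a dry run by default). It uses the real `docker run` flags `-d` (detached) and `--name`. The container names are hypothetical. The image names `redis` and `mongo` are assumed to be the official Docker Hub images.

```python
import shlex
import shutil
import subprocess

# Hypothetical container names mapped to (assumed) official Docker Hub image names.
COMPONENTS = {"messaging": "redis", "database": "mongo"}


def run_command(name, image):
    """Build the argv that starts one component in its own detached container."""
    return ["docker", "run", "-d", "--name", name, image]


def start_all(dry_run=True):
    """Print (and optionally execute) one `docker run` per component."""
    commands = [run_command(name, image) for name, image in COMPONENTS.items()]
    for argv in commands:
        print(shlex.join(argv))
        # Only execute when explicitly asked to and when the docker CLI is on PATH.
        if not dry_run and shutil.which("docker"):
            subprocess.run(argv, check=True)
    return commands


if __name__ == "__main__":
    start_all(dry_run=True)
```

Each component gets its own container and therefore its own libraries. Adding a service is one more entry in `COMPONENTS`, not another round of compatibility checks on the host.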

What are containers?

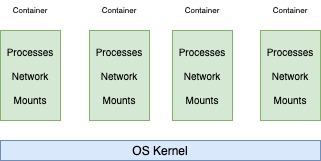

Containers are completely isolated environments. Just like virtual machines, they can have their own processes or services, their own network interfaces and their own mounts. Unlike virtual machines, however, they all share the same operating system kernel.
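On Linux, this per-container isolation of processes, networking and mounts is provided by kernel namespaces. The Python sketch below lists the namespaces the current process belongs to by reading `/proc/self/ns`. It assumes a Linux host. On systems without `/proc` it returns an empty result. Running it on the host and inside a container should show different `pid`, `net` and `mnt` identifiers, even though both use the same kernel.

```python
import os

NS_DIR = "/proc/self/ns"  # Linux-only: one symlink per namespace of this process


def current_namespaces():
    """Map namespace type (e.g. 'pid', 'net', 'mnt') to its identifier, like 'net:[4026531840]'.

    Processes in the same namespace report the same identifier.
    Returns an empty dict where /proc is unavailable (e.g. macOS, Windows).
    """
    if not os.path.isdir(NS_DIR):
        return {}
    result = {}
    for name in sorted(os.listdir(NS_DIR)):
        try:
            result[name] = os.readlink(os.path.join(NS_DIR, name))
        except OSError:
            continue  # skip entries we are not permitted to read
    return result


for ns_type, ident in current_namespaces().items():
    print(f"{ns_type:20} {ident}")
```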

It is also important to know that containers are not new with Docker. Container technologies existed years before Docker; examples include LXC and related tools such as LXD and LXCFS. Early versions of Docker used LXC under the hood, and Docker later replaced it with its own runtime, libcontainer (now runc). Setting up these container environments directly is hard because they are very low level, and that is where Docker offers a high-level tool with several powerful functions.

Basic concepts of the operating system

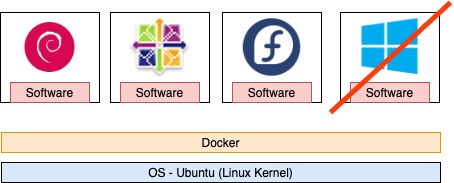

If you look at operating systems like Ubuntu, Fedora and CentOS, they all consist of two things: an OS kernel and a set of software. The kernel is responsible for interacting with the underlying hardware. The kernel stays the same across these systems (Linux, in this case). It is the software above it that makes these operating systems differ. That software may include a different UI, drivers, compilers, file managers, developer tools and so on. So you have a common Linux kernel across all these operating systems, and some custom software that differentiates them from each other.

Sharing the Kernel

If the underlying operating system is Ubuntu, Docker can run a container based on another distribution such as Debian, Fedora or CentOS. This works because each container holds only the additional software that makes that distribution different, and uses the host's kernel. What about an OS that does not share the same kernel, such as Windows? You cannot run a Windows-based container on a Docker host running Linux. For that, you need Docker on a Windows server.
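The kernel-sharing rule can be captured in a deliberately simplified Python model. Each OS is described only by its name and kernel family, and a container can run on a host only when the kernel families match. The model is an assumption for illustration. It ignores details such as CPU architecture, and setups like Docker Desktop, which runs Linux containers inside a lightweight Linux VM on Windows and macOS.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OperatingSystem:
    name: str
    kernel: str  # kernel family the OS is built on (simplified)


# Each distribution = a kernel family + its own software (the software is not modeled here).
UBUNTU = OperatingSystem("Ubuntu", "linux")
DEBIAN = OperatingSystem("Debian", "linux")
FEDORA = OperatingSystem("Fedora", "linux")
CENTOS = OperatingSystem("CentOS", "linux")
WINDOWS = OperatingSystem("Windows Server", "windows")


def can_run(host: OperatingSystem, image_os: OperatingSystem) -> bool:
    """A container reuses the host's kernel, so the kernel families must match."""
    return host.kernel == image_os.kernel


for image_os in (DEBIAN, FEDORA, CENTOS, WINDOWS):
    print(f"{image_os.name} container on {UBUNTU.name} host: {can_run(UBUNTU, image_os)}")
```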

Isn't that a disadvantage?

Unlike hypervisors, Docker is not meant to virtualize and run different operating systems and kernels on the same hardware. The main purpose of Docker is to containerize applications, ship them and run them.

Virtual Machines vs Containers

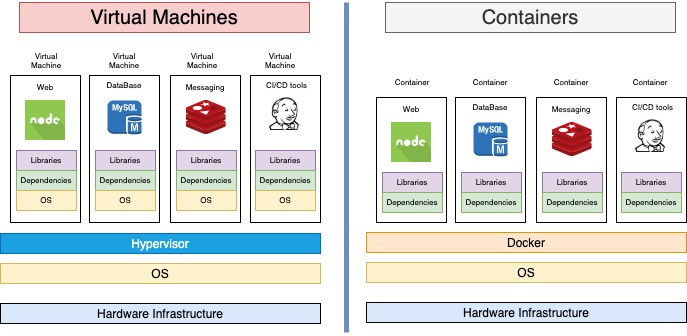

In the case of Docker, we have the underlying hardware infrastructure, then the operating system, and Docker installed on that OS. Docker then manages the containers, which run with their own libraries and dependencies.

In the case of virtual machines, a hypervisor such as ESX (or some other kind of virtualization) runs on the underlying hardware, either directly or on top of a host OS, and the virtual machines run on the hypervisor. Each virtual machine has its own operating system inside it.

| | Virtual Machines | Containers |
| --- | --- | --- |
| Resource utilization | Higher (each VM runs its own full OS) | Lower (containers share the host kernel) |
| Size | Larger disk footprint, typically GB | Smaller disk footprint, typically MB |
| Boot-up time | Slow | Fast |

It is also important to know that containers have less isolation than virtual machines, because more resources, such as the kernel, are shared between containers. Virtual machines, by contrast, are completely isolated from each other.

Images vs Containers

An image is a package or template, similar to the VM templates you might have worked with in the virtualization world. It is used to create one or more containers.

Containers are running instances of images. Each container is isolated and has its own environment and set of processes.
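The image/container relationship resembles the template/instance idea, which a loose Python analogy can show. This is not Docker's API. An `Image` is an immutable template, and each `Container` created from it gets its own ID and its own writable changes. This loosely mirrors how Docker gives every container a thin writable layer on top of the read-only image. All class and function names here are hypothetical.

```python
from dataclasses import dataclass, field
from itertools import count

_next_id = count(1)  # simple source of unique container IDs


@dataclass(frozen=True)
class Image:
    """Read-only template: a name plus the files it ships, as (path, content) pairs."""
    name: str
    files: tuple


@dataclass
class Container:
    """A running instance of an image with its own writable state."""
    image: Image
    container_id: int = field(default_factory=lambda: next(_next_id))
    changes: dict = field(default_factory=dict)  # writes made inside this container only

    def write(self, path, content):
        self.changes[path] = content  # the shared image is never modified

    def read(self, path):
        if path in self.changes:
            return self.changes[path]
        return dict(self.image.files).get(path)


def run(image):
    """Create a new container from an image (analogous in spirit to `docker run`)."""
    return Container(image)


web = Image("web-app", (("/app/config", "default"),))
a = run(web)
b = run(web)
a.write("/app/config", "custom")
print(a.read("/app/config"))
print(b.read("/app/config"))
```

Both containers start from the same image, but the change made in `a` is invisible to `b` and to the image itself.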