A Kubernetes cluster has a large number of pods and services.

- How are the pods addressed?

- How do they communicate with each other?

- How do you access the services running on the pods, both from within the cluster and from outside it?

Kubernetes does not come with a built-in solution for this. It expects you to implement a networking solution that solves these challenges. However, Kubernetes does lay out clear requirements for pod networking.

Networking Model

- Every pod should have a unique IP address.

- Every pod should be able to communicate with every other pod on the same node.

- Every pod should be able to communicate with every other pod on other nodes without NAT.

Any solution is acceptable as long as it automatically assigns IP addresses and establishes connectivity between pods on the same node as well as pods on different nodes. So how do you implement a model that meets these requirements?

There are many networking solutions available that do this.

Examples include Flannel, Cilium, and VMware NSX.

Let's try to solve it ourselves.

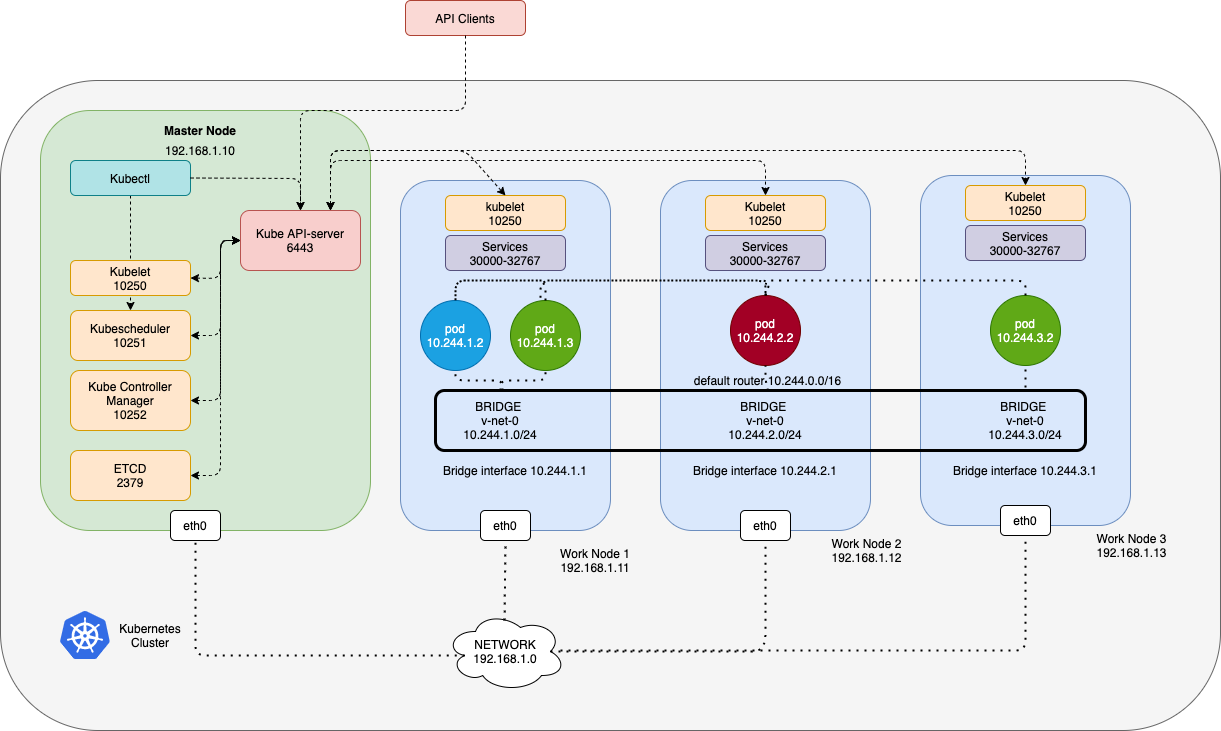

Suppose we have a three-node cluster. It does not matter which node is the master or a worker; they all run pods, either for management or workload purposes.

First, consider the first two requirements:

- Every pod should have a unique IP address.

- Every pod should be able to communicate with every other pod on the same node.

1. Assign IP addresses to the nodes, for example 192.168.1.11, 192.168.1.12, and 192.168.1.13.

2. When containers are created, Kubernetes creates network namespaces for them. To enable communication between them, we attach these namespaces to a network. Thus, we need a bridge network on each node.

3. Assign addresses to the bridge interfaces or networks. Each bridge network will be on its own subnet; choose any private address range, for example 10.244.1.0/24, 10.244.2.0/24, and 10.244.3.0/24.

4. Set the IP address for the bridge interface on each node.

5. To attach a container to the network, we need a pipe or virtual network cable (a veth pair).
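The steps above can be sketched as shell commands for a single node. This is a minimal dry-run sketch, not a definitive implementation: the bridge name `v-net-0`, the namespace name `pod1`, the veth interface names, and the /24 subnet layout are all assumptions made for illustration. The script only prints the `ip` commands; running them for real requires root and an existing network namespace.

```shell
#!/bin/sh
# Dry-run helper: prints each command instead of executing it.
run() { echo "$@"; }

# setup_bridge NODE_INDEX
# Assumption: node N uses a bridge named v-net-0 on subnet 10.244.N.0/24,
# with the bridge interface itself at 10.244.N.1.
setup_bridge() {
    run ip link add v-net-0 type bridge
    run ip link set dev v-net-0 up
    run ip addr add "10.244.$1.1/24" dev v-net-0
}

# attach_container NETNS POD_IP
# Connects an existing network namespace to the bridge with a veth pair.
attach_container() {
    ns=$1
    ip=$2
    # Create the "virtual cable": one end for the pod, one for the bridge.
    run ip link add "veth-$ns" type veth peer name "veth-$ns-br"
    # Move one end into the pod's namespace, plug the other into the bridge.
    run ip link set "veth-$ns" netns "$ns"
    run ip link set "veth-$ns-br" master v-net-0
    # Assign the pod IP and bring both ends up.
    run ip -n "$ns" addr add "$ip/24" dev "veth-$ns"
    run ip -n "$ns" link set "veth-$ns" up
    run ip link set "veth-$ns-br" up
}

setup_bridge 1
attach_container pod1 10.244.1.2
```

To execute the commands instead of printing them, replace the body of `run` with `"$@"`.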

Next, the third requirement: every pod should be able to communicate with every other pod on other nodes without NAT.

6. Add routing table entries to route traffic between nodes. For example:

node1$ ip route add 10.244.2.2 via 192.168.1.12

node1$ ip route add 10.244.3.2 via 192.168.1.13

node2$ ip route add 10.244.1.2 via 192.168.1.11

node2$ ip route add 10.244.3.2 via 192.168.1.13

node3$ ip route add 10.244.1.2 via 192.168.1.11

node3$ ip route add 10.244.2.2 via 192.168.1.12

This works for a simple setup, but it requires a lot more configuration as the underlying network architecture gets more complicated. Instead of configuring routes on each node, a better solution is to do that on a router, if you have one in your network, and point all hosts to use it as their default gateway.
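Whether the routes live on each node or on a central router, they are easier to maintain per subnet than per pod. The following is a minimal sketch that prints one route per remote node, assuming node N has the IP 192.168.1.1N and owns the pod subnet 10.244.N.0/24. The function name `print_routes` and the /24 prefix are illustrative assumptions, not part of the original setup.

```shell
#!/bin/sh
# print_routes SELF
# Prints the "ip route add" commands that node SELF (1, 2, or 3) would need
# to reach the pod subnets hosted on the other two nodes.
# Assumption: node N has IP 192.168.1.1N and pod subnet 10.244.N.0/24.
print_routes() {
    self=$1
    for n in 1 2 3; do
        # A node does not need a route to its own pod subnet.
        if [ "$n" -ne "$self" ]; then
            echo "ip route add 10.244.$n.0/24 via 192.168.1.1$n"
        fi
    done
}

print_routes 1
```

With subnet routes, adding a pod on an existing node needs no routing change at all; only adding a node does.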

So far we ran these commands manually. In practice, they would go into a script. So how do we run that script automatically when a pod is created on Kubernetes?

That is where the Container Network Interface (CNI) comes in.

CNI tells Kubernetes how to call a script as soon as it creates a container, and it tells us what our script should look like.

The script should have an add section that takes care of adding a container to the network and a delete section that takes care of deleting container interfaces from the network.
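The add and delete sections described above could be laid out as follows. This is only a skeleton under stated assumptions: the function name `net_script`, the `add`/`del` keywords, and the positional arguments are hypothetical, and the actual network commands are left as comments.

```shell
#!/bin/sh
# net_script ACTION CONTAINER NAMESPACE
# Hypothetical skeleton of a networking script with an add and a delete section.
net_script() {
    action=$1
    container=$2
    namespace=$3
    case "$action" in
        add)
            # Here: create the veth pair, attach both ends,
            # assign an IP address, and bring the interfaces up.
            echo "adding $container ($namespace) to the network"
            ;;
        del)
            # Here: delete the veth pair and release the IP address.
            echo "deleting $container ($namespace) from the network"
            ;;
        *)
            echo "usage: net_script add|del CONTAINER NAMESPACE" >&2
            return 1
            ;;
    esac
}

net_script add pod1 ns1
```

Note that real CNI plugins receive the operation through environment variables such as `CNI_COMMAND` and `CNI_NETNS` rather than positional arguments; the positional form here follows the simplified model used in this text.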

Once the script is ready, the kubelet takes over. The kubelet on each node is responsible for creating containers. Whenever a container is created, the kubelet looks at the CNI configuration passed as a command-line argument when it was run and identifies our script's name. It then looks in the CNI bin directory to find our script and executes it with the add command and the name and namespace ID of the container.
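The lookup described above can be imitated in a few lines of shell. This is a rough sketch of the idea, not how the kubelet is actually implemented: the function name `invoke_plugin`, the file names, and the temporary directories are assumptions made for the demo. It relies on the fact that a CNI configuration file is JSON whose `type` field names the plugin executable; on a real node the directories are commonly `/etc/cni/net.d` and `/opt/cni/bin`.

```shell
#!/bin/sh
# invoke_plugin CONF_FILE BIN_DIR CONTAINER NAMESPACE
# Reads the plugin name from the configuration, finds it in the bin
# directory, and runs it with the "add" command.
invoke_plugin() {
    conf=$1
    bin_dir=$2
    # Extract the value of the "type" field (simple sed match, not a JSON parser).
    name=$(sed -n 's/.*"type"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' "$conf" | head -n 1)
    if [ -z "$name" ] || [ ! -f "$bin_dir/$name" ] || [ ! -x "$bin_dir/$name" ]; then
        echo "plugin '$name' not found in $bin_dir" >&2
        return 1
    fi
    "$bin_dir/$name" add "$3" "$4"
}

# Demo with throwaway directories standing in for the real conf and bin dirs.
demo=$(mktemp -d)
mkdir -p "$demo/net.d" "$demo/bin"
printf '{ "name": "mynet", "type": "net-script.sh" }\n' > "$demo/net.d/10-mynet.conf"
printf '#!/bin/sh\necho "$1 container=$2 netns=$3"\n' > "$demo/bin/net-script.sh"
chmod +x "$demo/bin/net-script.sh"

invoke_plugin "$demo/net.d/10-mynet.conf" "$demo/bin" pod1 /var/run/netns/pod1
rm -rf "$demo"
```

The stand-in plugin simply echoes its arguments, which makes it easy to see what the caller passed to it.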