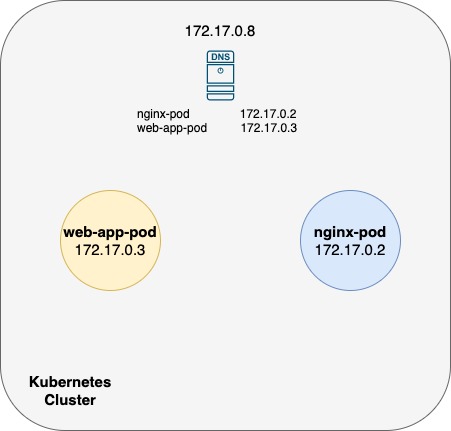

How does Kubernetes implement DNS? The name-to-IP entries need to live in a central DNS server such as CoreDNS.

| | In web-app-pod | In nginx-pod |
| --- | --- | --- |
| way 1 | echo "172.17.0.2 nginx-pod" >> /etc/hosts | echo "172.17.0.3 web-app-pod" >> /etc/hosts |
| way 2 | echo "nameserver 172.17.0.8" >> /etc/resolv.conf | echo "nameserver 172.17.0.8" >> /etc/resolv.conf |

Way 2 is the approach to use in a Kubernetes cluster.

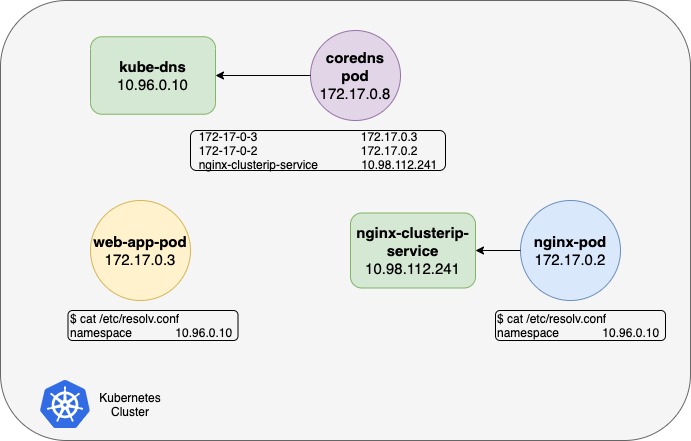

Every time a new pod is created, we would add a record for it in the DNS server so that other pods can reach it, and point the /etc/resolv.conf file in the new pod at the cluster DNS server so that it can resolve other pods in the cluster. This is roughly how Kubernetes does it: it deploys a DNS server within the cluster. The difference is that it does not create entries that map a pod's name to its IP address. It does that only for services. For pods, it forms hostnames by replacing each . with - in the pod's IP address.

| Hostname | Namespace | Type | Root | IP Address |
| --- | --- | --- | --- | --- |
| nginx-clusterip-service | default | svc | cluster.local | 10.98.112.241 |
| 172-17-0-2 | default | pod | cluster.local | 172.17.0.2 |
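As a rough illustration of how the columns in the table combine into a full DNS name, the following shell sketch joins hostname, namespace, type, and root with dots. The function names pod_dns_name and service_dns_name are hypothetical helpers made up for this example, and cluster.local is assumed as the default root.

```shell
# Hypothetical helper: build the DNS name for a pod from its IP and namespace.
# The root domain defaults to cluster.local (an assumption for this sketch).
pod_dns_name() {
    ip=$1
    namespace=$2
    root=${3:-cluster.local}
    # Replace every dot in the IP with a dash: 172.17.0.2 -> 172-17-0-2
    dashed=$(printf '%s' "$ip" | tr '.' '-')
    printf '%s.%s.pod.%s\n' "$dashed" "$namespace" "$root"
}

# Hypothetical helper: build the DNS name for a service from its name and namespace.
service_dns_name() {
    printf '%s.%s.svc.%s\n' "$1" "$2" "${3:-cluster.local}"
}

pod_dns_name 172.17.0.2 default
service_dns_name nginx-clusterip-service default
```

For the two rows in the table, this prints 172-17-0-2.default.pod.cluster.local and nginx-clusterip-service.default.svc.cluster.local.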

Prior to version 1.12, the DNS server implemented by Kubernetes was kube-dns. As of Kubernetes version 1.12, the recommended DNS server is CoreDNS.

How is CoreDNS set up in the cluster?

$ kubectl get pods -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

coredns-74ff55c5b-vjvpb 1/1 Running 5 4d22h 172.17.0.8 minikube <none> <none>

- The CoreDNS server is deployed as a pod in the kube-system namespace.

- This pod runs the CoreDNS executable, the same executable that we ran when we deployed CoreDNS ourselves.

CoreDNS requires a configuration file. In Kubernetes, this file is stored in a ConfigMap, as shown below.

$ kubectl get ConfigMap coredns -n kube-system -o yaml

apiVersion: v1
data:
  Corefile: |
    .:53 {
        errors
        health {
           lameduck 5s
        }
        ready
        kubernetes cluster.local in-addr.arpa ip6.arpa {
           pods insecure
           fallthrough in-addr.arpa ip6.arpa
           ttl 30
        }
        prometheus :9153
        forward . /etc/resolv.conf {
           max_concurrent 1000
        }
        cache 30
        loop
        reload
        loadbalance
    }
kind: ConfigMap
metadata:
  creationTimestamp: "2021-01-19T11:21:40Z"
  managedFields:
  - apiVersion: v1
    fieldsType: FieldsV1
    fieldsV1:
      f:data:
        .: {}
        f:Corefile: {}
    manager: kubeadm
    operation: Update
    time: "2021-01-19T11:21:40Z"
  name: coredns
  namespace: kube-system
  resourceVersion: "256"
  uid: 65e2cda2-d57a-4f39-a3f5-6bcf4620fb06

This file configures a number of plugins, which handle errors, report health, expose monitoring metrics, cache responses, and so on. The plugin that makes CoreDNS work with Kubernetes is the kubernetes plugin. This is also where the top-level domain name for the cluster is set, on the line kubernetes cluster.local, so every record in the CoreDNS server falls under this domain.

The pods option, set here as pods insecure, is responsible for creating records for pods in the cluster.

A record is created for each pod by converting its IP address into a dashed format. This is disabled by default, but it can be enabled with the entry here. Any name that the DNS server cannot resolve, for example when a pod tries to reach www.google.com, is forwarded to the nameserver specified in the CoreDNS pod's /etc/resolv.conf file. That /etc/resolv.conf file is set to use the nameserver from the Kubernetes node. Note also that the Corefile is passed into the pod as a ConfigMap object, so if you need to modify the configuration, you should edit the ConfigMap object.

| Hostname | Namespace | Type | Root | IP Address |
| --- | --- | --- | --- | --- |
| 172-17-0-2 | default | pod | cluster.local | 172.17.0.2 |
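The split between names answered from cluster records and names forwarded upstream can be sketched with a small shell function. This is a simplified model only: route_query is a hypothetical name, it looks at the cluster.local zone alone, and it ignores the reverse zones (in-addr.arpa, ip6.arpa), caching, and the other plugins in the Corefile.

```shell
# Hypothetical model of the Corefile above: decide which plugin would
# answer a query. Only the cluster.local zone is considered here.
route_query() {
    # Drop a trailing dot so "cluster.local." and "cluster.local" match alike.
    name=${1%.}
    case $name in
        cluster.local|*.cluster.local)
            # Inside the cluster domain: answered by the kubernetes plugin.
            echo kubernetes
            ;;
        *)
            # Anything else goes to the nameserver in /etc/resolv.conf.
            echo forward
            ;;
    esac
}

route_query nginx-clusterip-service.default.svc.cluster.local
route_query www.google.com
```

The first call prints kubernetes and the second prints forward, matching the description of www.google.com being handed to the upstream nameserver.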

When the cluster runs a CoreDNS pod, it watches the Kubernetes cluster for new pods and services, and every time a pod or a service is created it adds a record for it to its database.

$ kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-74ff55c5b-vjvpb 1/1 Running 6 5d

$ kubectl get service -n kube-system

kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP,9153/TCP 5d

$ kubectl get pod -n default -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-pod 1/1 Running 2 10h 172.17.0.9 minikube <none> <none>

web-app-pod 1/1 Running 2 10h 172.17.0.4 minikube <none> <none>

$ kubectl get service -n default

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

nginx-clusterip-service ClusterIP 10.98.112.241 <none> 80/TCP 10h

CoreDNS connection test

$ kubectl exec -it web-app-pod -- sh

[ root@web-app-pod:/ ]$ cat /etc/resolv.conf

nameserver 10.96.0.10

search default.svc.cluster.local svc.cluster.local cluster.local

options ndots:5

$ kubectl exec -it nginx-pod -- sh

# cat /etc/resolv.conf

nameserver 10.96.0.10

search default.svc.cluster.local svc.cluster.local cluster.local

options ndots:5

If you run curl nginx-clusterip-service, it returns with no error, just as it does for the full name nginx-clusterip-service.default.svc.cluster.local. This works because /etc/resolv.conf has a search entry listing default.svc.cluster.local, svc.cluster.local, and cluster.local, and the resolver appends these suffixes to short names.

[ root@web-app-pod:/ ]$ curl nginx-clusterip-service.default.svc.cluster.local

Welcome to nginx!

[ root@web-app-pod:/ ]$ curl nginx-clusterip-service

Welcome to nginx!

This works only for services, so you won't be able to reach a pod the same way. You need to specify the full FQDN of the pod.

[ root@web-app-pod:/ ]$ curl 172-17-0-9

curl: (6) Couldn't resolve host '172-17-0-9'

[ root@web-app-pod:/ ]$ curl 172-17-0-9.default.pod.cluster.local

Welcome to nginx!
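The behaviour seen in these curl tests can be reproduced with a small model of the search list. The sketch below follows the rules described in the resolv.conf(5) manual page for search and ndots; the function name candidates is hypothetical, and real resolver libraries may differ in details.

```shell
# Hypothetical model: print, in order, the names a resolver would try.
# $1 = name to resolve, $2 = ndots value, remaining args = search domains
candidates() {
    name=$1
    ndots=$2
    shift 2
    # A trailing dot marks the name as fully qualified: no search list is used.
    case $name in
        *.) printf '%s\n' "$name"; return ;;
    esac
    # Count the dots in the name by deleting every other character.
    dots_only=$(printf '%s' "$name" | tr -cd '.')
    dots=${#dots_only}
    # With at least ndots dots, the name is tried as-is first.
    if [ "$dots" -ge "$ndots" ]; then
        printf '%s\n' "$name"
    fi
    # Then each search domain is appended in turn.
    for domain in "$@"; do
        printf '%s.%s\n' "$name" "$domain"
    done
    # With fewer than ndots dots, the name as-is is tried last.
    if [ "$dots" -lt "$ndots" ]; then
        printf '%s\n' "$name"
    fi
}

candidates nginx-clusterip-service 5 default.svc.cluster.local svc.cluster.local cluster.local
candidates 172-17-0-9 5 default.svc.cluster.local svc.cluster.local cluster.local
```

For nginx-clusterip-service, the first candidate is nginx-clusterip-service.default.svc.cluster.local, which exists, so the short name resolves. For 172-17-0-9, none of the candidates contains the pod subdomain, so 172-17-0-9.default.pod.cluster.local is never tried and the full name has to be given explicitly.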