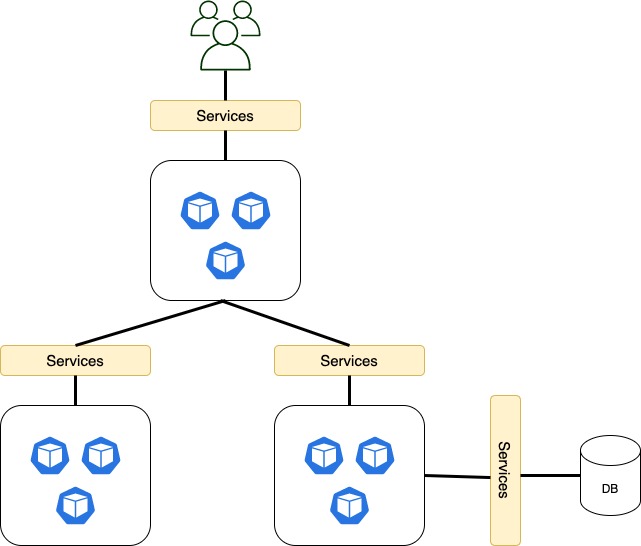

Kubernetes services enable communication between various components within and outside of the application. They help us connect applications with other applications or with users. For example, our application may have groups of pods running various sections: one group serving the frontend load to users, other groups running backend processes, and a group connecting to an external data source. Services enable connectivity between these groups of pods. They make the frontend application available to end users, allow communication between backend and frontend pods, and help establish connectivity to an external data source. Thus, services enable loose coupling between the microservices in our application.

Node Port Service

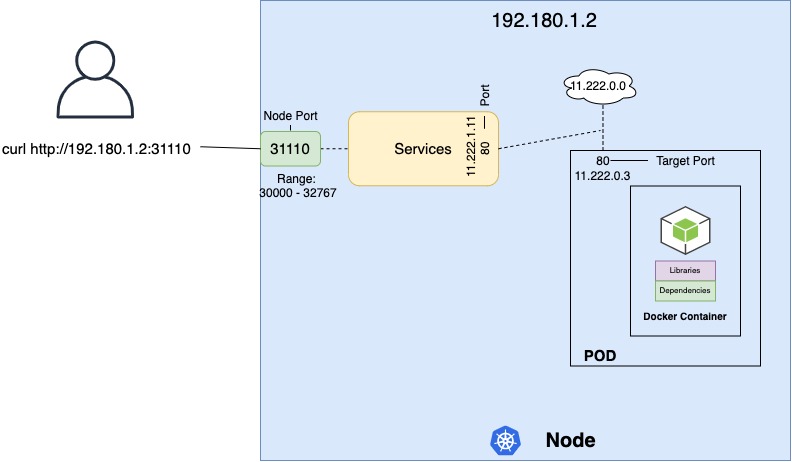

A Kubernetes service is an object, just like the pods, ReplicaSets, or deployments that we have worked with. One of its use cases is to listen on a port on the node and forward requests arriving on that port to a port on the pod running the web application. This type of service is known as a NodePort service.

The service helps us by mapping a port on the node to a port on the pod. It is in fact like a virtual server inside the node. Inside the cluster, it has its own IP address, which is called the cluster IP of the service. Lastly, node ports must fall within a valid range, which by default is 30000 to 32767.

Node Port Service

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-pod
  labels:
    app: nginx
spec:
  containers:
    - name: nginx-container
      image: nginx
      ports:
        - containerPort: 80
          protocol: TCP
```

```console
$ kubectl apply -f nginx-pod.yaml
pod/nginx-pod created
```

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-nodeport-service
spec:
  type: NodePort
  ports:
    - targetPort: 80    # port on the pod that receives the traffic
      port: 80          # port on the service itself (its cluster IP)
      nodePort: 31110   # port opened on the node
  selector:
    app: nginx          # matches the label on nginx-pod
```

```console
$ kubectl apply -f nginx-nodeport-service.yaml
service/nginx-nodeport-service created
```

```console
$ kubectl get services
NAME                     TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)        AGE
nginx-nodeport-service   NodePort    10.104.215.34   <none>        80:31110/TCP   49s
kubernetes               ClusterIP   10.96.0.1       <none>        443/TCP        15d

$ kubectl get nodes -o wide
NAME       STATUS   ROLES    AGE   VERSION   INTERNAL-IP    EXTERNAL-IP   OS-IMAGE           KERNEL-VERSION       CONTAINER-RUNTIME
minikube   Ready    master   15d   v1.19.2   192.168.49.2   <none>        Ubuntu 20.04 LTS   4.15.0-122-generic   docker://19.3.8
```

```console
# If you are using minikube
$ minikube ip
127.0.0.1

$ minikube ssh
docker@minikube:~$ curl http://127.0.0.1:31110
<!DOCTYPE html>
<html>
<head>
<title>Welcome to nginx!</title>
<style>
body {
width: 35em;
margin: 0 auto;
font-family: Tahoma, Verdana, Arial, sans-serif;
}
</style>
</head>
<body>
<h1>Welcome to nginx!</h1>
<p>If you see this page, the nginx web server is successfully installed and
working. Further configuration is required.</p>
<p>For online documentation and support please refer to
<a href="http://nginx.org/">nginx.org</a>.<br/>
Commercial support is available at
<a href="http://nginx.com/">nginx.com</a>.</p>
<p><em>Thank you for using nginx.</em></p>
</body>
</html>
```

In the ports section, the only mandatory field is port. If you do not provide a target port, it is assumed to be the same as port. If you do not provide a node port, a free port in the valid range between 30000 and 32767 is automatically allocated. You can have multiple such port mappings within a single service. The selector provides a list of labels to identify the pods.
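The defaults described here can be illustrated with a small sketch. The service name, the port names, and the second mapping (443 to 8443 with node port 31443) are hypothetical and only serve to show the shape of a multi-port definition; the stock nginx pod in this article listens on port 80 only.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-nodeport-multi   # hypothetical name, not from the original
spec:
  type: NodePort
  selector:
    app: nginx
  ports:
    # Only port is given: targetPort defaults to 80 and a free
    # node port in the 30000-32767 range is allocated automatically.
    - name: http               # each port needs a name once a service has more than one
      port: 80
    # Fully specified mapping (illustrative values only).
    - name: https
      port: 443
      targetPort: 8443         # hypothetical container port
      nodePort: 31443          # must lie within the valid node port range
```

After applying a manifest like this, kubectl get services would show which node port was allocated for the first mapping.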

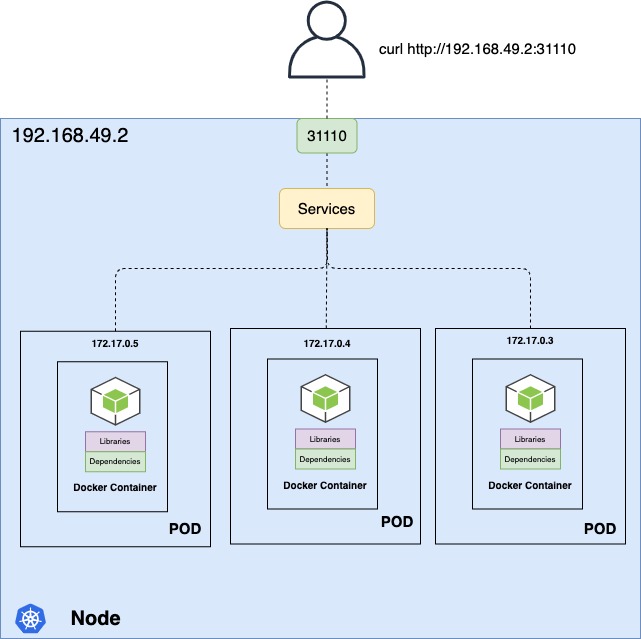

Node Port Service with multiple pods

Distributing requests across all matching pods is default behavior in Kubernetes.

In a production environment, you have multiple instances of the application running for higher availability and load balancing. In this case, we have multiple similar pods running our application. They all have the same label, with the key app set to the value apple, and the same label is used as the selector when the service is created. So, when the service is created, it looks for pods matching the label and finds three of them. The service then automatically selects all three pods as endpoints to forward external requests coming from users; you do not have to do any additional configuration. It uses a random algorithm to choose a pod for each request. Thus, the service acts as a built-in load balancer that distributes load across the pods.
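One way to get several identical, identically labeled pods is a Deployment. The following is a minimal sketch, not the setup used for the diagrams: the name nginx-deployment is hypothetical, and it assumes the app: nginx label from the earlier manifests rather than the label value shown in the figure, so that the nginx-nodeport-service selector would match all three pods.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment       # hypothetical name, not from the original
spec:
  replicas: 3                  # three identical pods
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx             # same label the service selector looks for
    spec:
      containers:
        - name: nginx-container
          image: nginx
          ports:
            - containerPort: 80
```

The service definition itself does not change; it picks up every pod carrying the matching label.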

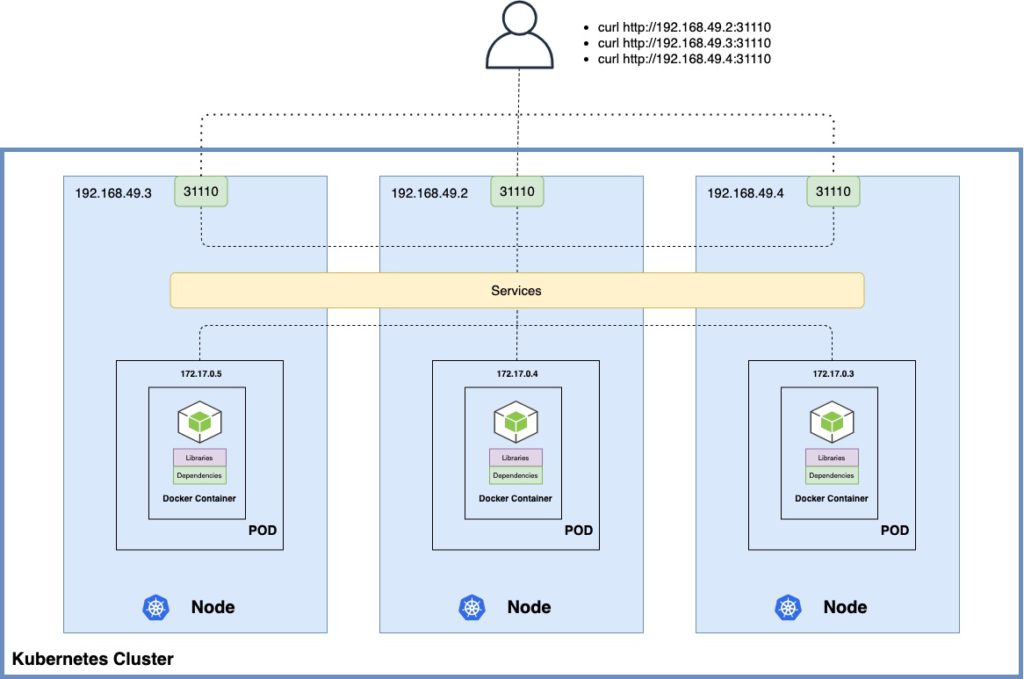

Now consider pods distributed across multiple nodes. In this case, we have the application running in pods on separate nodes in the cluster. When we create a service, Kubernetes automatically creates one that spans all the nodes in the cluster, without any additional configuration on our part, and maps the target port to the same node port on every node. You can then access your application using the IP of any node in the cluster and the same port number.

Thus, in any case, whether it is a single pod on a single node, multiple pods on a single node, or multiple pods on multiple nodes, the service is created in exactly the same way, without you having to take any additional steps during service creation. When pods are removed or added, the service is automatically updated, making it highly flexible and adaptive. Once created, you won’t typically have to make any additional configuration changes.